One of the goals we set from the beginning with T.E.A.M. was to make the VR experience as natural as possible, even for users with no previous experience of virtual reality headsets. In this regard, choosing the Oculus Quest headset was almost mandatory. Besides being the only VR headset on the market today capable of providing a complete VR experience (with 6 degrees of freedom) without being connected to an external PC, the Quest can also track the user’s hands in real time, thanks to four cameras that scan and process the surrounding physical space, so that the hands themselves act as controllers within the VR environment. “So great!”, we said to ourselves, “We can make digital experiences without worrying about cables and external sensors, and above all we can let users use their own hands!”

We realized, however, that from the point of view of designing and developing digital experiences, the transition from traditional controllers to hand tracking brings quite a few headaches. While it’s true that controllers have a non-trivial learning curve, they still offer many advantages over current hand-tracking technology in VR. The time the user spends learning which buttons on the controller produce specific poses and functions for their “digital hands” is rewarded by at least three levels of feedback: buttons, directional controls, and haptic feedback (vibration). Losing that feedback was in fact the first big problem we encountered. Getting rid of the controllers eliminates the perception of interaction: you no longer have anything in your hands that can replicate the feeling of holding something, nor can you feel vibrations. In other words, every interaction is completely virtual and abstract. The second problem is technical: how can we recreate the physical behavior of the objects we interact with? In the real world this problem does not exist, because objects are subject to the properties that rule the physical world: gravity, friction, inertia, mass, acceleration, and so on. In a VR experience these properties need to be simulated as accurately as possible.

Here is a practical example: imagine lifting a cup in the real world. We could hold it in the palm of our hand, grasp it by the rim with two fingers, or lift it by the handle. All of these actions are possible thanks to the various forces that act upon the cup in the real world. In VR, we could in theory apply the same principle to the 3D model of a cup: set the cup and the digital projection of our hands as “physical bodies”, enable the physics simulation, and that’s it! Unfortunately, in practice, the result leaves much to be desired.

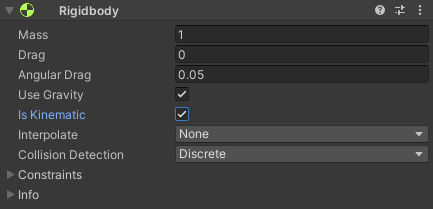

Physics simulations are in fact among the most complex operations a processor has to compute, and they rely on numerous simplifications to be manageable in real time. Consequently, the simulation is far less faithful than reality, which makes the interaction complicated and unsatisfying for the user. This does not mean the approach is impracticable: there are experiences for which it works well, while others, like the cup example above, require a different approach. An alternative strategy is to disable the physics simulation of the cup at the precise moment we grasp it, and make it move according to a set of predefined movements and behaviors. This approach is known as “kinematic” motion. To do this in Unity (the game engine we use to build our VR experiences), we need to enable an option, Is Kinematic, in the Rigidbody component that handles the physics simulation of the cup.

By enabling this option, the cup stops moving according to the simulated forces acting on it (gravity, collisions, etc.) while still being able to interact with other “physical objects” and to follow behaviors and animations that we define arbitrarily. The drawback is that these animations now override the “physical” behavior of the object, which can cause it to intersect with other objects.
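To make the difference between the two modes concrete, here is a minimal, engine-independent sketch in Python. It is not Unity code: the `Body` class, its `is_kinematic` flag, and the `move_to` method are hypothetical names chosen for illustration, and the "physics" is reduced to gravity acting on a single height value.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2 along the vertical axis


@dataclass
class Body:
    """Toy one-dimensional body (height only), for illustration."""
    y: float                    # height in metres
    vy: float = 0.0             # vertical velocity in m/s
    is_kinematic: bool = False  # hypothetical flag, mirrors the idea in the text

    def step(self, dt: float) -> None:
        """Advance the simulation by dt seconds."""
        if self.is_kinematic:
            return  # kinematic bodies ignore simulated forces
        self.vy += GRAVITY * dt
        self.y += self.vy * dt

    def move_to(self, y: float) -> None:
        """Scripted move, e.g. following the hand that holds the object."""
        self.y = y
        self.vy = 0.0


cup = Body(y=1.0)
cup.step(0.1)            # simulated: the cup starts to fall
cup.is_kinematic = True  # "grasped": forces no longer apply
cup.step(0.1)            # the height does not change
cup.move_to(1.5)         # the cup goes wherever our script puts it
```

Note that `move_to` places the cup without checking for anything in the way, which is exactly how a kinematic object can end up intersecting other objects.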

However, it is crucial for us that the relationship with virtual objects stays as realistic as possible. While this method may be the best solution for some VR experiences, in our case it would involve too many limitations. How, then, do we interact with our cup? Ideally, we would trigger the transition from physical to kinematic mode at the moment we touch the cup, while still simulating contact with it. There is an excellent strategy to satisfy this need: “gestures”. In practice, these are predefined hand poses that are recognized by the device and can be associated with predetermined events. To achieve this, a “virtual bone” is assigned to almost every real bone in our hand, and the tracking device assigns position and rotation values to these virtual bones based on how we move our fingers. When a gesture is defined, this data is saved and later used to identify that specific hand pose. During the VR experience, the tracking device reads the finger data and, as soon as it matches one of the predefined gestures, the corresponding event is triggered. So, going back to our cup, rather than trying to “physically” grab it, we could bring the hand closer, clench the fist (a gesture commonly called grab, or fist) and at that point move the cup via a script or according to the movements of the hand. At the same time, we could also change the pose of the hand by “hooking” it to the cup in a suitable way, to convey the perception of a correct grip.
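The matching step described above can be sketched as follows. This is a simplified illustration, not the recognizer of any specific toolkit: the `GestureRecognizer` class, the bone names, and the 2 cm tolerance are assumptions, and the sketch compares bone positions only, whereas a real system would typically also use rotations.

```python
import math
from typing import Callable, Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # bone name -> position relative to the wrist, in metres


def pose_matches(current: Pose, saved: Pose, tolerance: float = 0.02) -> bool:
    """True if every saved bone lies within `tolerance` of its recorded position."""
    return all(
        bone in current and math.dist(current[bone], target) <= tolerance
        for bone, target in saved.items()
    )


class GestureRecognizer:
    """Stores named poses and fires a callback when the tracked hand matches one."""

    def __init__(self) -> None:
        self._gestures: Dict[str, Tuple[Pose, Callable[[], None]]] = {}

    def define(self, name: str, pose: Pose, on_detected: Callable[[], None]) -> None:
        """Save a snapshot of the hand as a named gesture."""
        self._gestures[name] = (dict(pose), on_detected)

    def update(self, current: Pose) -> Optional[str]:
        """Call once per frame; returns the name of the matched gesture, if any."""
        for name, (saved, callback) in self._gestures.items():
            if pose_matches(current, saved):
                callback()
                return name
        return None


# Hypothetical recorded data: two fingertip bones of a closed fist.
FIST: Pose = {"index_tip": (0.02, 0.03, 0.0), "thumb_tip": (0.03, 0.02, 0.01)}

events = []
recognizer = GestureRecognizer()
recognizer.define("grab", FIST, lambda: events.append("grab"))

open_hand: Pose = {"index_tip": (0.0, 0.17, 0.0), "thumb_tip": (0.08, 0.08, 0.0)}
almost_fist: Pose = {"index_tip": (0.025, 0.03, 0.0), "thumb_tip": (0.03, 0.025, 0.01)}

first = recognizer.update(open_hand)     # no gesture matches
second = recognizer.update(almost_fist)  # close enough to the saved fist
```

In the cup scenario, the `grab` callback is where the cup would be switched to kinematic mode and attached to the hand. A production recognizer would usually fire only when the gesture starts or ends, rather than on every matching frame as this sketch does.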

The versatility of the gesture system doesn’t end there. It can also be used independently to activate specific features, such as teleportation – one of the most used methods of locomotion in VR experiences.

Gestures have also come to our rescue in mitigating the problem we mentioned at the beginning of this article: the lack of haptic feedback in the absence of controllers. Having their hands free allows users to perform countless actions without having to learn a new device, but when it comes to interacting with virtual objects, the lack of something physical to grip is very noticeable. We realized this from the very first tests, and an unexpected solution came from one gesture in particular: the pinch. This gesture consists of joining the tip of the index finger with that of the thumb, and the pressure it generates, even though it comes only from our own fingers, gives the impression of contact: you get the feeling of interacting with something rather than with empty air. We obtained the opposite effect with the grab (the gesture that consists of closing the hand into a fist), as if tightening the hand around a cup. Here the fingers close on the palm as if they were passing through the virtual cup that we would expect to touch. That’s why in Piattaforma Zero we’ve moved from an early design of a large, spherical slider, which invites the user to grasp it with the whole hand, to a smaller, flattened one, which is naturally grasped with two fingers.
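As an illustration of how simple a pinch test can be, here is a minimal Python sketch that checks the distance between the two fingertips. The function name and the 1.5 cm threshold are assumptions made for the example, not values taken from the Quest or from any toolkit.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def is_pinching(thumb_tip: Vec3, index_tip: Vec3, threshold: float = 0.015) -> bool:
    """True when the thumb and index fingertips are within `threshold` metres."""
    return math.dist(thumb_tip, index_tip) <= threshold


touching = is_pinching((0.0, 0.0, 0.0), (0.01, 0.0, 0.0))  # tips 1 cm apart
apart = is_pinching((0.0, 0.0, 0.0), (0.05, 0.0, 0.0))     # tips 5 cm apart
```

Because the test fires when the fingertips actually meet, the moment the event triggers coincides with the pressure the user feels between their own fingers.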

Once we understood these needs and analyzed the pros and cons of the different ways to build interactions with the objects in our projects, we decided to use an external toolkit, that is, a set of basic functionalities already set up. Specifically, we chose the Microsoft Mixed Reality Toolkit (MRTK): an open-source package for Unity that includes many well-structured features, such as a solid framework for interactions, with support for hand tracking on the Oculus Quest. An alternative would have been to build a virtual reality framework from scratch, which we initially tried in order to understand how much work it would require. We eventually discarded this idea in favor of something already tested, which would allow us to focus on the VR experiences rather than on the technical details of the infrastructure that supports them. We also tested other toolkits, both free and commercial, but they offered solutions that were either too broad and complex or too limited for us. In the latter case, what was missing was, above all, support for hand tracking, the feature on which we decided to base all direct user input. This gap exists because the feature is still very recent and, as we have seen, not fully developed, though it will probably become the standard in the future. In the meantime, we’re working to make it the standard at least in our own VR experiences.

Andrea D’Angelo, January 19, 2021

Cover image

Clip from The Lawnmower Man, directed by Brett Leonard (Allied Vision, 1992)